Gartner Data and Analytics 2023 Conference Overview

My experience peering into the mind of Gartner and judging the top trends impacting enterprise analytics and business intelligence in 2023 and beyond.

The Gartner Data and Analytics Conference 2023 went off without a hitch this week, as top data leaders from across the industry came together to drink from the Gartner fountain and take stock of the top analytics trends for 2023. I had a blast and met a ton of cool people, saw some awesome presentations and product demos, and even met a few Super Data Brothers superfans down in sunny Orlando. In this blog post I’ll summarize the top trends and tools I saw at Gartner and give you my thoughts on where things are headed.

Want a video recap of Gartner Data and Analytics 2023? Check out this episode of Super Data Show

Gartner Analytics Trend #1: Lack of data ROI

This was a major theme across the whole conference - after a decade of ridiculously low interest rates and tons of data hype made investing in data easy, we find ourselves suddenly asked to show genuine ROI for the first time in ages. For a long time it almost felt as if data was a self-justifying investment. Those days are over and Gartner delivered that message forcefully. I’ve heard it in lots of other places too.

Deep data ROI

Gartner distinguishes what they call ‘financial metrics’ from ‘financial influencing metrics’ as a way to reflect the deep value of data investments. Financial metrics are the things you immediately think of to justify spend on data - increased revenue and productivity, reduced cost. These things are great! But Gartner argues that the value of S&P 500 companies is now largely driven by long-term strategic financial influencing factors rather than traditional tactical factors - things like the value of the company brand, innovation and know-how. They argue that data teams should focus on delivering long-term strategic value over short-term tactical value.

I agree with Gartner - sort of. Data teams should strive to tie their work to long-term strategic sources of value. But in reality I think there are tons of data teams who struggle to tie their work even to short-term tactical value. If we do have a significant recession coming, CFOs are going to make short-term, tactical decisions about what to cut. They are more than willing to sacrifice ‘the brand’ to make the balance sheet and cashflow work this quarter. The incentives of our entire financial system practically mandate that they do so. Being able to show data’s contributions to classic metrics like revenue is probably your best shield against short-term thinking and short-term cuts.

If you can’t show how you increase revenue and productivity and reduce cost and risk with your data practice, figure it out immediately! Then by all means, move on to showing long-term value.

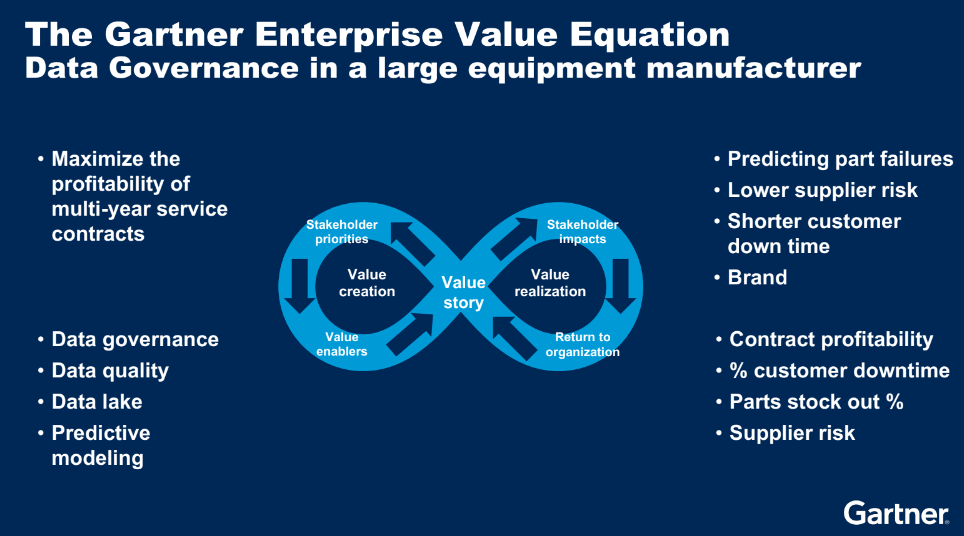

Gartner’s Enterprise Value Equation

The Gartner Enterprise Value Equation is their method for building long-term, financial influencing value for data teams. Here’s an example they shared from the conference:

There are two things I really like about this:

It’s circular - you’ve seen me argue over and over that the future of business intelligence is not a linear supply chain of insight, but something more circulatory that promotes the good and prunes the bad. The Gartner Enterprise Value Equation is exactly that - a system that seeks to build virtuous feedback loops between data and business teams.

It’s very focused on identifying, delivering and measuring value to the business in business terms. Too many data teams measure value in terms of their own assets - number of dashboards, count of monthly users, amount of raw data processed - which ultimately have no relationship to business value. By first identifying the desired business goal and focusing all your efforts on that, you can build a much more valuable data practice.

Ultimately this is one of many systems for building out a successful data and analytics practice - you are free to shop around for what works. But as far as it goes, the Gartner Enterprise Value Equation has the solid foundation of business value and positive feedback loops that I endorse.

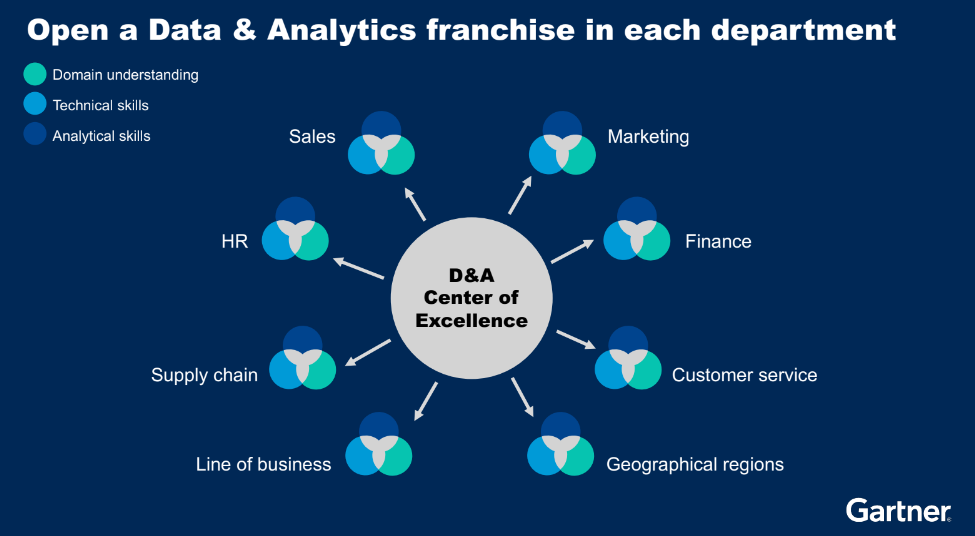

Gartner Analytics Trend #2: The franchise model

The overall structure and operating model of enterprise analytics has become a hot topic, just another sign that the Tableau era is over. Zhamak Dehghani’s Data Mesh is my personal favorite, but there’s also Data Fabric and Data Vault. You can now add ‘The Franchise Model’ of analytics to the list. It has a lot in common with these other approaches.

The Gartner Franchise Model of Analytics is designed to allow your business units autonomy to run their own tailored analytics programs while maintaining a centrally provisioned platform, suite of tools and operating model. The idea is to bring back governance, data quality and some degree of standards while maintaining the speed and agility that accompany business-led analytics.

The analogy here is to any franchise-style business - McDonald’s, for example. Your D&A Center of Excellence is like McDonald’s corporate. Just as McDonald’s corporate provides the machines, products, advertising and operating instructions, your COE provides the underlying data platform and infrastructure, centralized metrics and analytics playbooks. Each business unit is then a franchise and brings domain knowledge and their own unique way of looking at and deriving value from data.

If you choose the right tooling for your centralized platform, you can easily provide the bedrock technology for a distributed data practice. Features like headless metric stores, composability and analytics-as-code are key to unlocking the Gartner Franchise Model of Analytics in my opinion. An underlying platform that has these capabilities will be use-case agnostic, meaning it will no longer matter that Supply Chain likes Power BI while Marketing likes Tableau and your data scientists like to code their own Python. The underlying platform can handle connections to all these endpoints while maintaining consistency of data and UX.

Gartner Analytics Trend #3: Return of data governance

Three big trends in the BI space drive what I call ‘The Return of Data Governance’ in 2023. It’s not that governance ever went away, but it was definitely deprioritized during the 2010s in favor of speed and data analyst agility. In essence, the ability to quickly pump out dashboards trumped data governance concerns. However, we’ve reached a point where many companies have 2500 Snowflake views driving 2500 Tableau dashboards and simply can’t manage it all, let alone maintain high-quality data. That’s where modern governance techniques come in.

In order to be successful this time around we need some new approaches. Luckily there are a ton of smart people trying to bring back governance without killing agility or innovation. In that vein, the top three trends driving modern data governance as I see it are:

Semantic layers and data catalogs

This is the modern incarnation of an old idea - that there should be a layer that sits between the data and the analytics that allows for high-quality, consistent metrics across all downstream uses. Building a semantic layer was in fact mandatory in legacy BI tools like Cognos, but the practice fell out of favor in the Tableau era. Now semantic layers are back, with some very interesting changes from ye olden days:

Open metrics layer: These tools work with any front end - BI tools, AI/ML or data apps. They are not proprietary to a single use or company.

APIs, SQL and more: At a minimum these tools allow API and SQL access, but they often can interpret DAX, MDX and other data languages as well.

Catalog + metrics in one place: Many of them offer catalog and search capabilities alongside real-time metrics query.

Designed as a graph: Semantic layers are really knowledge graphs, and modern tools are designed this way from the ground up.

Some examples of tools that do some or all of this are GoodData, AtScale, Illumex and Data.World.
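To make the 'define it once, use it everywhere' idea concrete, here is a minimal sketch in plain Python. It is not how any of the tools above actually work; the `Metric` class, the `METRICS` registry, the `compile_sql` helper and the `orders` table are all names I made up for illustration. The point is only that every downstream consumer asks for a metric by name and gets the same definition back.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """A governed metric definition (hypothetical structure, not a vendor API)."""
    name: str
    expression: str  # SQL aggregate expression
    table: str       # source table the metric is computed from


# Central, governed definitions: the single source of truth.
METRICS = {
    "revenue": Metric("revenue", "SUM(amount)", "orders"),
    "order_count": Metric("order_count", "COUNT(*)", "orders"),
}


def compile_sql(metric_name: str, group_by: tuple[str, ...] = ()) -> str:
    """Turn a metric name plus optional dimensions into a SQL query string."""
    metric = METRICS[metric_name]
    select_cols = list(group_by) + [f"{metric.expression} AS {metric.name}"]
    sql = f"SELECT {', '.join(select_cols)} FROM {metric.table}"
    if group_by:
        sql += f" GROUP BY {', '.join(group_by)}"
    return sql


# A dashboard and a notebook both ask for "revenue" by name,
# so neither one can drift to its own definition.
print(compile_sql("revenue", ("region",)))
print(compile_sql("revenue"))
```

A real semantic layer does far more than this (joins, access control, caching, multiple query languages), but the core contract is the same: consumers reference metric names, not hand-written SQL.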

Composability

Composability is a term that has yet to permeate the data world, but it’s quickly picking up steam. Composability means modularity - a composable data platform is made up of components that can be created, altered, re-arranged and combined in unique ways, either by the IT team or - more powerfully - by the business.

Composability enhances governance by allowing the IT team to maintain a library of analytics components that can be used by anyone downstream. For example, you may maintain composable metrics, data catalog and visualization capabilities centrally. An individual business unit then chooses to use the metrics and data catalog but keep their own visualization tool. By choosing a composable data platform you increase adoption by offering a better deal than the traditional ‘all or nothing’ offer from central IT.
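Here is a rough sketch of that 'pick what you need' deal, again in plain Python. Everything in it is hypothetical: the `CENTRAL_LIBRARY` contents, the component names and the `compose_stack` function are assumptions for illustration, not any product's API.

```python
from __future__ import annotations

# Components the central team maintains (names are made up for illustration).
CENTRAL_LIBRARY = {
    "metrics": "central-metrics-layer",
    "catalog": "central-data-catalog",
    "visualization": "central-dashboards",
}


def compose_stack(
    adopted: set[str], own_tools: dict[str, str] | None = None
) -> dict[str, str]:
    """Build a business unit's stack from central components plus its own tools."""
    unknown = adopted - set(CENTRAL_LIBRARY)
    if unknown:
        raise ValueError(f"Not in the central library: {sorted(unknown)}")
    stack = {name: CENTRAL_LIBRARY[name] for name in sorted(adopted)}
    stack.update(own_tools or {})  # the unit's own choices fill the gaps
    return stack


# Marketing adopts the central metrics and catalog but keeps its own viz tool.
marketing = compose_stack({"metrics", "catalog"}, {"visualization": "Tableau"})
print(marketing)
```

The design choice worth noticing is that adoption is per component: the central team governs the pieces a unit opts into, and the unit stays free everywhere else.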

As far as I know GoodData is by far the leader in the composable BI space.

DataOps and analytics-as-code

The final piece of the modern governance puzzle is DataOps and analytics-as-code. These concepts come to us from the software development world, where they’ve already completely transformed the industry. By moving away from UI-based data platform management, you dramatically increase speed and scale. Code is just more efficient and easier to manage once you embrace it. Some advantages of this approach:

Super fast deployments: Modern software deployment and testing techniques allow you to test and push changes to prod at tremendous speed and with no downtime. Composability ensures that you’re only updating a single component at a time, which reduces risk.

Scale rapidly: Using declarative code, you can build out an entire analytics environment very quickly, and update it even faster. Because you can parameterize the environment, it becomes incredibly responsive to change. Things that used to take weeks (change in org structure anyone?!) can be done in a day when properly architected.

Code and change management: Speaking of enhanced governance, by using industry-standard code management techniques you have the ultimate in data platform governance. You’ll always know who changed what code, when and why, and be able to roll back easily.

Easy integration: Analytics-as-code platforms have declarative APIs and SDKs that make bi-directional integration between your data platform and downstream AI/ML and data apps much easier. Imagine a single Python script that imports data, transforms it, provisions a BI environment, creates the dashboards and applies security based on a set of parameters in the data - this is possible!
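To give a feel for the 'parameterized environment' idea, here is a small sketch. It does not call any real platform's SDK; `build_environment`, `plan` and the spec layout are assumptions of mine, and a real deployment would hand the resulting spec to whatever declarative API your platform exposes. What it shows is an org change becoming a one-line parameter change, with the difference computed rather than clicked through.

```python
from __future__ import annotations

import json


def build_environment(org_units: list[str], metrics: list[str]) -> dict:
    """Render a declarative spec with one workspace per org unit (hypothetical layout)."""
    return {
        "workspaces": [
            {
                "id": unit.lower().replace(" ", "-"),
                "title": f"{unit} Analytics",
                "metrics": sorted(metrics),
                # Security derived from the same parameter: each unit sees its own rows.
                "row_filter": {"column": "org_unit", "equals": unit},
            }
            for unit in sorted(org_units)
        ]
    }


def plan(current: dict, desired: dict) -> dict[str, list[str]]:
    """Compare two specs and list which workspaces to create or delete."""
    current_ids = {w["id"] for w in current["workspaces"]}
    desired_ids = {w["id"] for w in desired["workspaces"]}
    return {
        "create": sorted(desired_ids - current_ids),
        "delete": sorted(current_ids - desired_ids),
    }


# An org change (a new Finance unit) is just a new entry in the parameter list.
before = build_environment(["Marketing", "Supply Chain"], ["revenue"])
after = build_environment(["Marketing", "Supply Chain", "Finance"], ["revenue"])

print(plan(before, after))
print(json.dumps(after["workspaces"][0], indent=2))
```

Because the spec is plain data generated from code, it can live in version control, which is where the 'who changed what, when and why' benefit comes from.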

This is another area where GoodData has invested heavily as an end-to-end data/BI platform. Other interesting players are dataops.live for data engineering and Nextdata for data mesh implementation.

What about the 2023 BI Magic Quadrant?

One puzzling thing about the conference was that the 2023 Magic Quadrant for Analytics and Business Intelligence was not released. I’m not sure why - this was the obvious time to share this year’s update. Anyway, they did discuss the top trends impacting the MQ in its absence.

We’ve already discussed governance, headless (semantics) and composability at length above. Consumer design focus really represents a shift in the front-end UX away from data analysts and towards data consumers. This is a trend I wholeheartedly agree with, as you can see here:

Another interesting thing they shared was potential changes coming to the structure of the BI MQ itself. They’ve considered combining it with the Data Science and Machine Learning MQ as the capabilities of these categories are bleeding into one another. They’ve also considered splitting analytics front end and analytics platform into two separate MQs.

Of these two, I support the second. With the rise of ‘The Franchise Model’ and the Return of Governance, comprehensive platform capabilities are rapidly becoming more important than having the prettiest dashboard. The current BI MQ is really tailored to the Tableau era and is heavily over-weighting the dashboard building experience for data analysts. I believe Gartner should either split these into separate categories, or at the very least re-weight the current MQ to give more credence to metrics layers, composability and platform capabilities.

I went into this conference believing a significant MQ shakeup is coming, and the perspective Gartner shared reinforced it. I wouldn’t expect a huge change this year, but 2024 feels like a big one to me.

One last thing on the MQ presentation - while Gartner didn’t say ‘Tableau is dead,’ they did mention that it’s under enormous pressure from Power BI and too expensive at scale. Being the best viz tool is not going to be sufficient in the 2020s I suspect.

Final thoughts

This was my first time at Gartner, and I had a blast. As someone from Michigan, going to Orlando in March is a default win. I know people have mixed feelings about Gartner, and I’ve certainly had my share of grievances over the years. But attending the conference gave me a different perspective and more appreciation for their research and what they bring to their clients. Unlike most other data conferences, this one really was driven by Gartner analysts and customers as opposed to vendors, and it showed.

Overall I was happily surprised by Gartner’s evolving perspective on analytics. I have been preaching the critical importance of analytics practice design, composability and metrics governance for years. During most of that time it felt like Gartner was on a different wavelength, but we seem to be coming more into alignment. My biggest takeaway for you is that it’s time to start thinking big picture again about your data practice design, embrace the modern data platform approach to analytics and think about how you can partner with the business to deliver real value, not just dashboards.